Students at The New School began this semester with a new addition to their syllabi and a potentially rude awakening about the future of their careers and education. TNS’ academic integrity policy now defines academic dishonesty as the “unauthorized use of artificial intelligence tools to generate ideas, images, art/design, audio, video, code, or text for any portions of work,” among other points.

But what it fails to define is how students and faculty are meant to understand a world where academics, artificial intelligence, and the future of our careers are now intrinsically linked.

During my first week this semester, I took note of my professors’ interpretations of the new policy. Some said they preferred we never type the word “ChatGPT” into our browsers, and others told us they encouraged the use of AI as long as there was transparency about it. I ended up with some level of moral whiplash.

Conversations with my classmates and friends revealed many students felt a similar confusion. Nobody knew where they stood. Nobody knew if it was truly alright to plug their 50 pages of Hegel into ChatGPT to generate an illuminating contribution for a Socratic seminar.

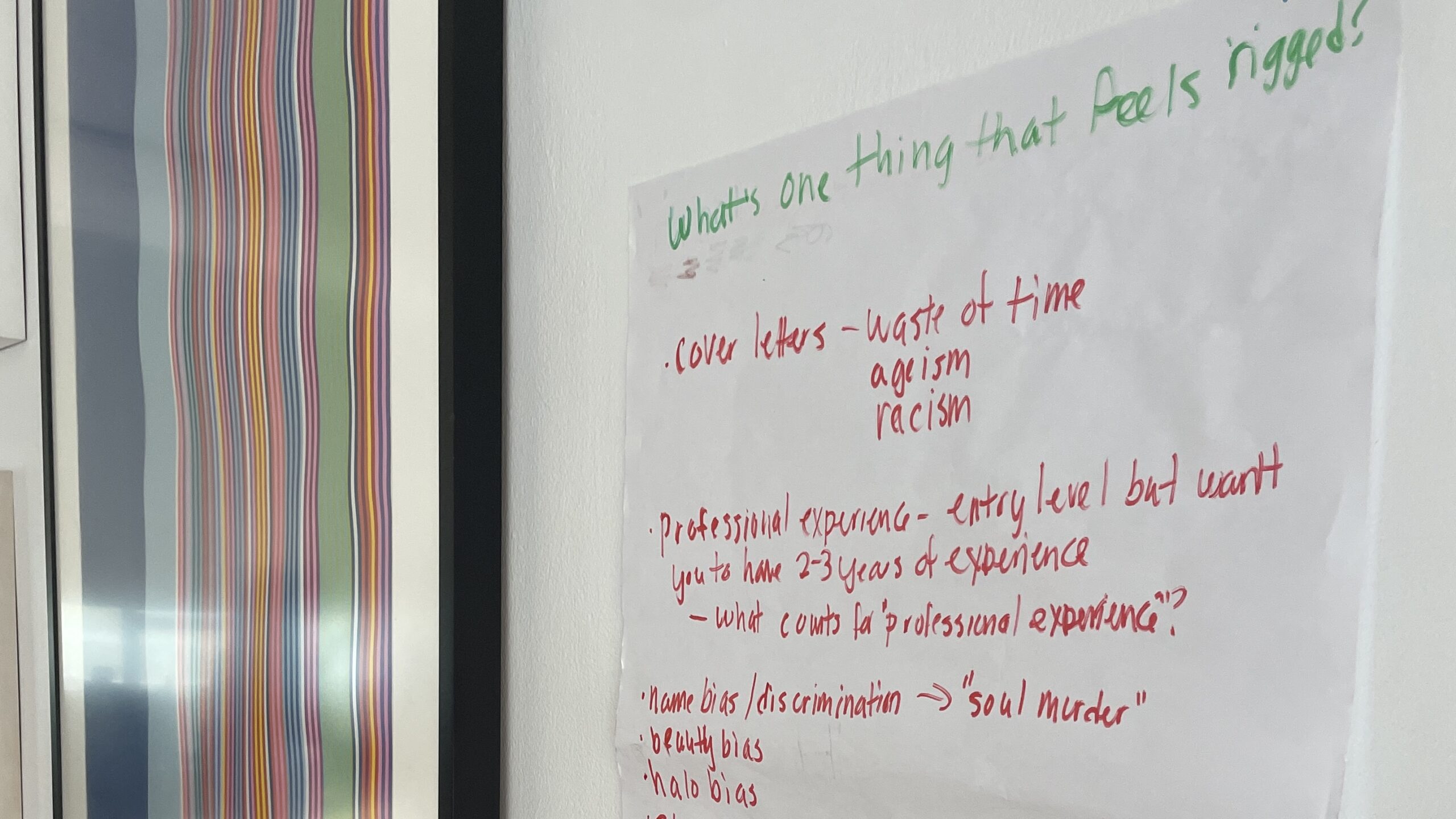

Students and faculty deserve more guidelines about the academic integrity policy and how we are meant to include generative AI in our schoolwork. The creation of these guidelines only comes through communication: promoting conversation about the inner workings of AI, the ethical considerations, and its potential implications for our creative practices.

As an institution, it is our obligation to ensure open conversations between students and faculty take place in the classroom to reframe our fears and even our excitement about the boom of generative AI.

Lisa Romeo, the assistant director of the University Learning Center, told the Free Press that “by the mid-spring semester it was not uncommon for students to be discussing it [AI] in a session with a tutor.”

Tools like ChatGPT have become an increasingly common resource for students navigating academic challenges such as dissecting complicated texts or getting frameworks for their essays. Services offered by the University Learning Center are a good starting point to help students examine AI-generated content and see how it can be useful to their learning goals.

The primary issue is that students and faculty aren’t sure where they stand on the ethics of AI in the classroom. Education on the inner workings of AI is where we can fill these gaps in understanding.

There is still some consensus among students that AI tools such as ChatGPT and text-to-image generators like DALL-E and Midjourney aren’t effective replacements for human intelligence in academic and creative work.

Meg Rowan, a senior literary studies major at Eugene Lang College of Liberal Arts, said, “I know, it’s definitely being used, but as far as I’m aware, my friends don’t really use ChatGPT for anything, or AI, just because we’re all kind of on the side of like, it’s unreliable. And also, just do your own work… and so I’m just kind of morally opposed to it.”

Sylvia Roussis, a junior screen studies major at Lang, even mentioned her professor’s take on the policy, saying, “I had a professor say, ‘If you use AI, don’t tell me. But I’ll probably know.’” Roussis also echoed a common sentiment of fear, but not utter hopelessness, about the future of AI.

“The idea is still floating in the air whether or not it’s going to be like an ‘Ex Machina’ situation by the time I’m looking for a job. But I’m not exactly jumping to that yet,” Roussis said.

This sentiment of an “Ex Machina situation” stuck with me. It feels as if AI is already advancing into something bigger than the human conglomerate: a big, scary army of robots making strides to replace the careers of writers.

The truth is, if we take a look at the inner workings of generative AI software, we can understand its inherent limitations and demystify the confusion.

Mark Bechtel, an assistant professor at Parsons School of Design, was the co-professor of the graduate lecture course “AI For Research and Practice” in spring 2023. Bechtel’s class, taught alongside Professor Jeongki Lim, centered around how AI could be used in creative practices to offer insights beyond traditional research methods.

“I think what my students in that class were most excited about was not necessarily that they would ever apply any of the tools that we were learning that got a bit more advanced in professional practice, but now they had a very clear understanding of, well, this is actually what it can do,” said Bechtel.

When students are shown the limitations of AI, such as how tools like ChatGPT only have access to information on the internet, or how text-to-image generators synthesize images from large data sets and interpolate across categories of images in those data sets, the threat of an “Ex Machina situation” feels less likely. Through open conversation, students recognize how algorithms are constructed. They feel a sense of control over the future, and AI becomes a source of inspiration.

Bechtel explained that the output of generative AI is a derivative of information that already exists. “There’s a really strong temptation to anthropomorphize the technology… when students believe that they are collaborating with something rather than, more accurately, recognizing that it’s actually just a tool that you have varying degrees of control over,” said Bechtel.

He also mentioned that more than 100 years ago, the American composer John Philip Sousa predicted that the recording of music would lead to the demise of music itself.

Aanya Arora, a junior integrative design major at Parsons, offered a similar insight when discussing the usage of text-to-image generators and concerns about plagiarizing artists.

“With the invention of the camera, people were worried that painting and fine arts would kind of see a decline. But it didn’t really decline. It’s still a big part of art today. It can go many ways. It could be like the camera where you’re using it as a tool, but it’s a whole separate thing. Or, it can infiltrate the art world and take over,” she said.

It appears that every technological boom is followed by a reckoning. Fear is a natural part of innovation, and sometimes, but not always, our fears are proven wrong.

The only way to get in front of artificial intelligence is to understand what’s behind it. Then, perhaps, we can see AI as a tool that only talks back because we program it to.
